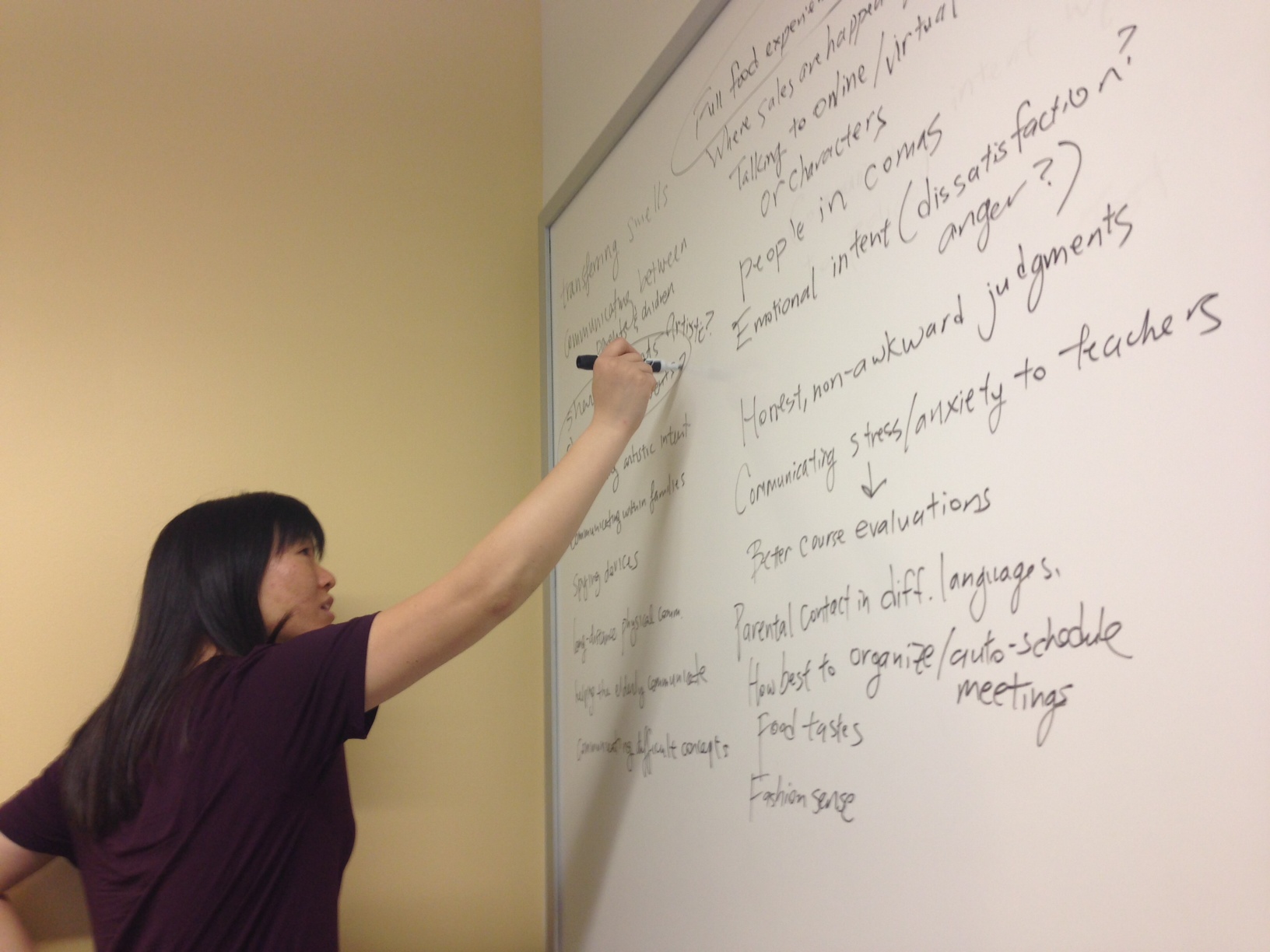

Ideation

Tagging notes on people in real life

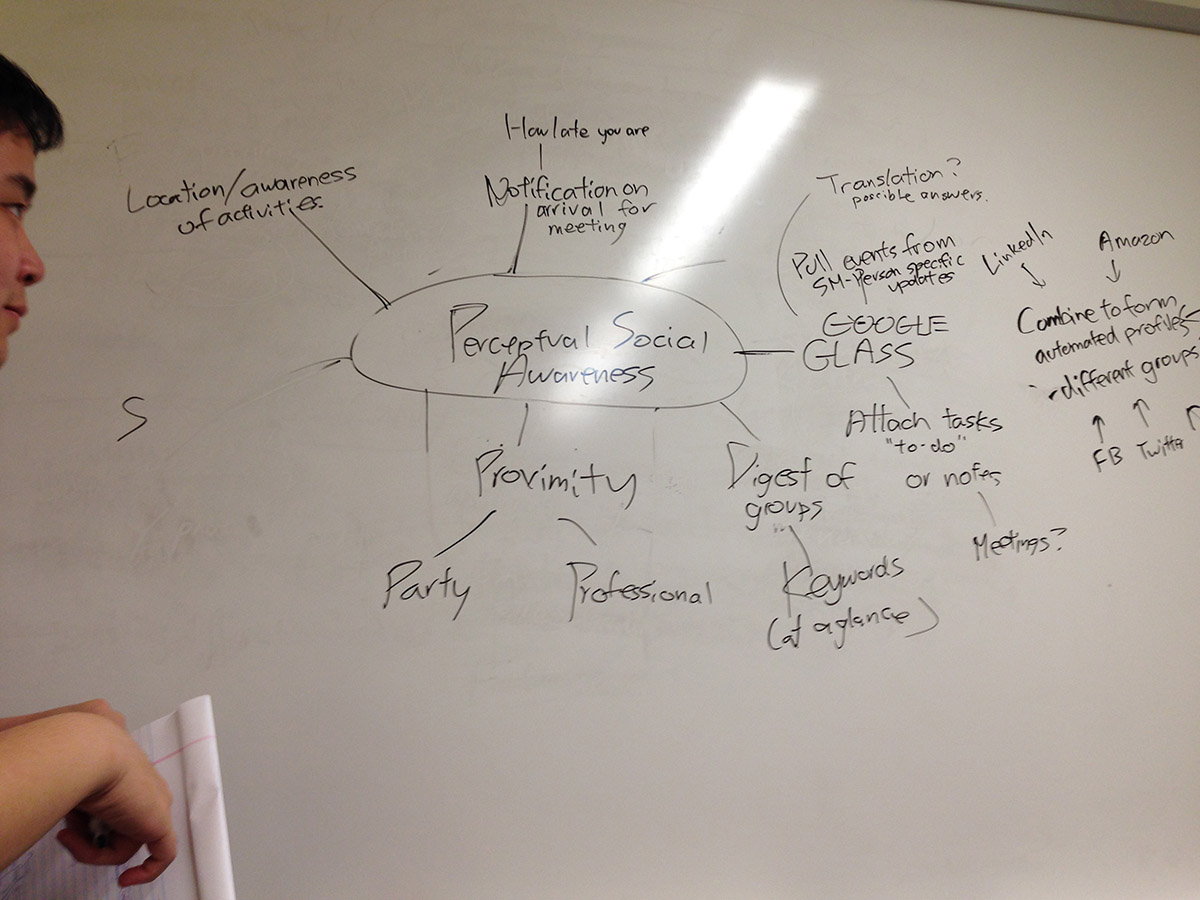

We carry mental notes on people wherever we go. For example, Christine is an extremely punctual person: be sure to be on time. Justin just got a new job at Facebook: be sure to congratulate him. Max asked me to fix a bug by Friday afternoon: remember to follow up with him. Sometimes these things slip our minds during a meetup, and it irks us when we remember them afterwards. We could type them in as reminders on our phones, but it would be awkward to keep checking a phone during a meeting. However, I believe we can enter a world of augmented reality, a sort of heads-up display for everyday life. With Google Glass and other wearable computers slowly gaining market penetration, we have the ability to display these notes to ourselves on the go. By combining data from social networks with our own personal thoughts, we can tag people with short notes. Users would be able to remind themselves to bring up topics during a meeting, or use statuses pulled from services such as Facebook or Twitter to springboard a conversation. The use cases are wide and varied, since each person prefers to “note” things in their own way. This application could serve users of all ages, but for now it would be targeted at users of wearable computers, a small population given the high cost of obtaining one today. In any case, such an application would address the need to make conversations more understandable and engaging by reminding users what needs to be done or suggesting topics to talk about.

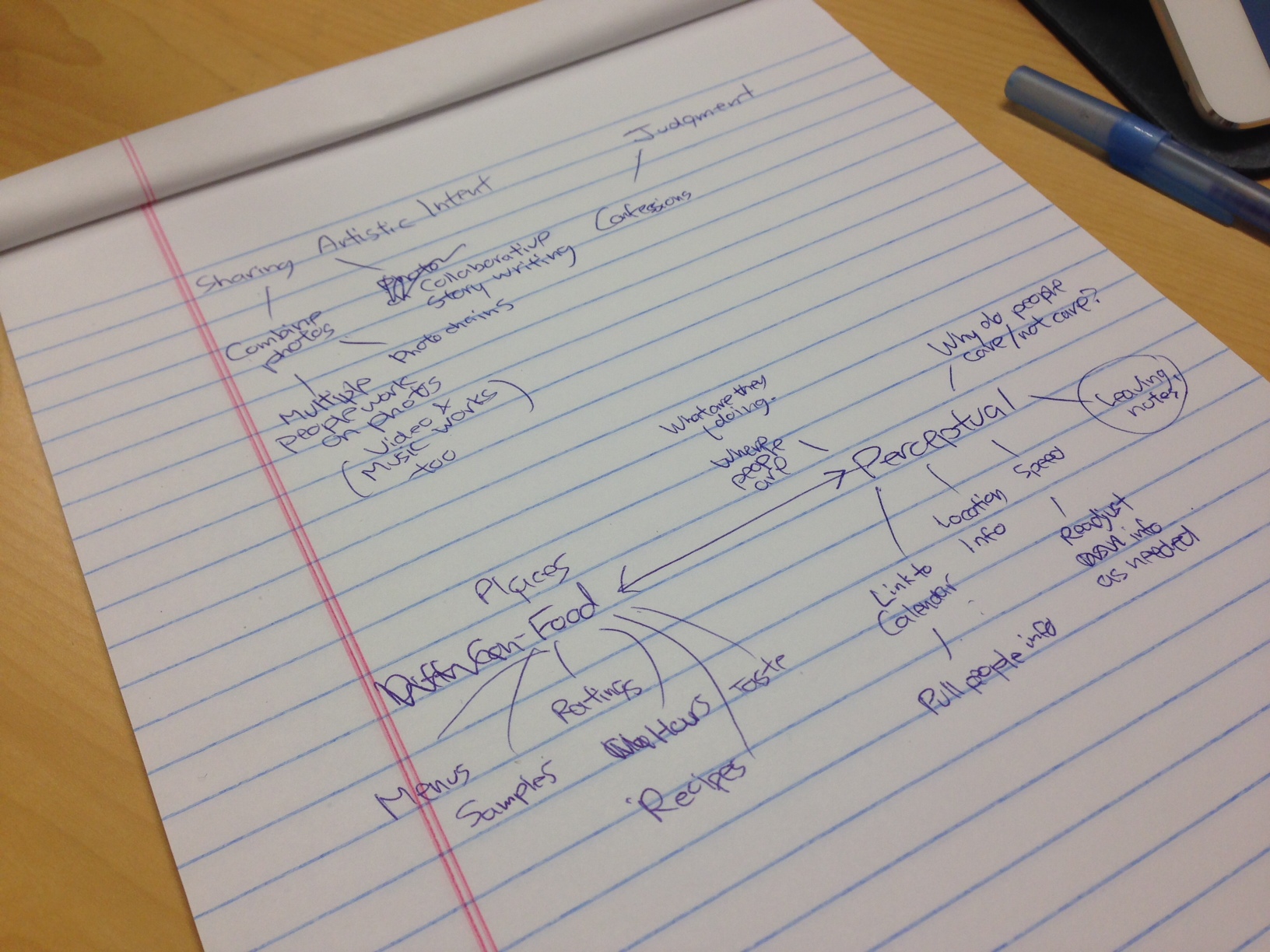

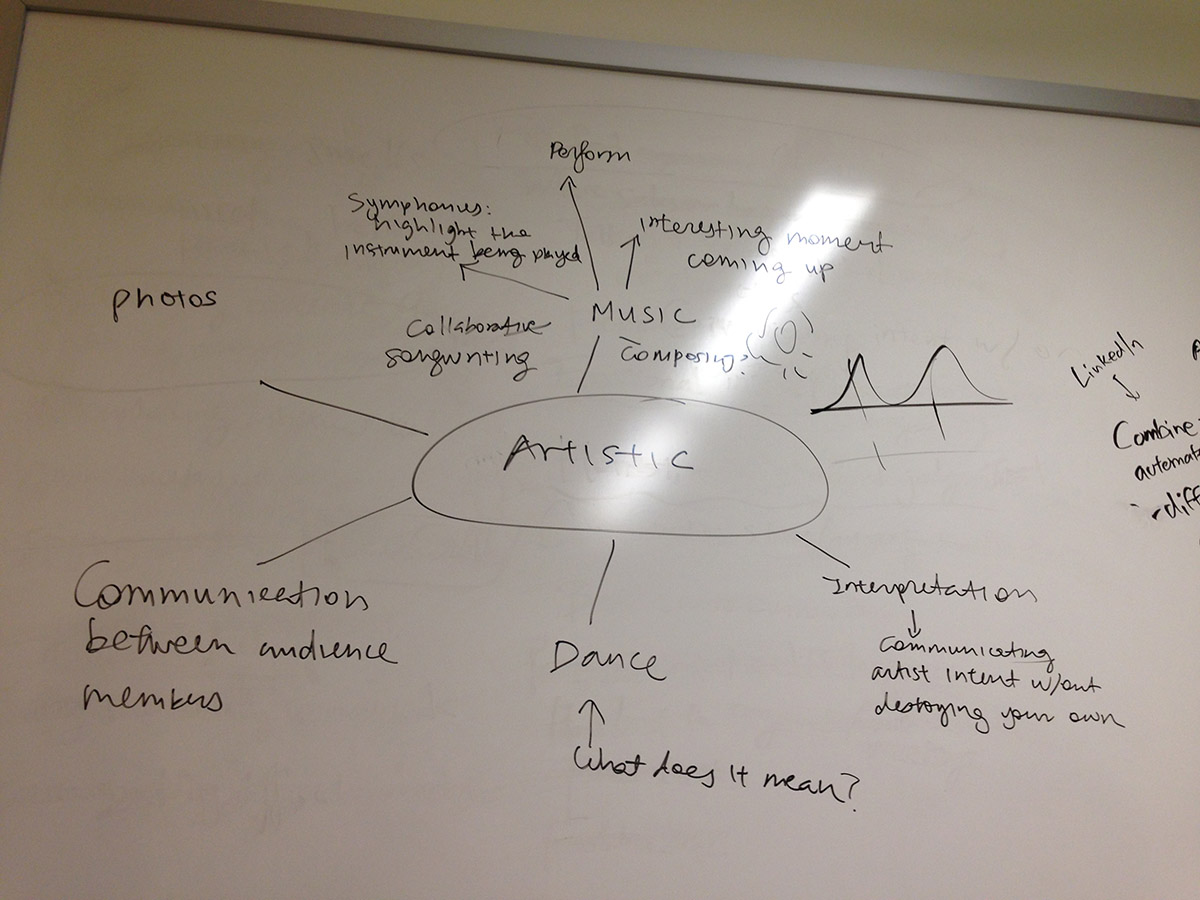

Communicating artistic intent

Performers have a message they want to convey to their audiences. Imagine the last live band you heard playing at a bar, or a poetry reading you walked into at a coffee house. Artists pour their hearts and souls into their performances, and they want the audience to come away with something, but what, exactly? Right now, the standard is for artists to communicate their intent through meet-and-greets and post-performance Q&As or, at more formal performances, through artists’ notes. But most audience members passing through casual shows at coffee houses and bars don’t know how or when to access this content. We want to create a mobile app that gives artists an easy way to communicate their intended message to the audience conveniently and at the right time. One can imagine a QR code on the tables in a coffee house that syncs your phone with the piece the artist is currently performing, so any audience member can immediately access artist notes about the current piece and learn more about the artist. This application would be targeted at small, casual shows rather than formal performances, since cell phone use is generally unacceptable in more formal settings. As mentioned above, situations could include live music or readings in public settings like coffee houses, or even street performers who want their audiences to learn more about them in an easy, non-committal way. This would address the need artists have to communicate with their audiences (something they cannot currently do easily while performing), and the target users would be independent artists and the audience members who watch (or simply walk into) these shows.

Digital mediums for communicating food experiences

Some of the biggest disappointments in restaurant dining happen because the dish you receive is very different from the expectation you formed from the menu. Most restaurant menus are currently composed of some combination of ingredient listings, item descriptions, and photographs, but these struggle to capture the full experience of what a dish is actually like. Diners have no way to know the smell, the taste, or the texture of a dish, all important components of the experience of eating something. We want to create a tablet application that uses recently available olfactory technology (like the Scentee and Chat Perf mobile accessories) to give diners a more comprehensive picture of their options and what they may be ordering. This application would be targeted at both restaurants and their customers, allowing the former to better communicate proper expectations to the latter. A menu item would have a description and a sumptuous photo, as is common, but would also be able to emit aromas and perhaps include audio clips representative of texture (the crunch of apples or the crispness of flaky pastry). Given the functionality available on a tablet, we also want to consider including short clips of the food being cooked, broken apart, or cut into (perhaps only for restaurants with a more limited menu, so as not to make choosing too time-consuming). We currently imagine this being most useful in a restaurant context, in which restaurant owners could reasonably justify purchasing large numbers of olfactory accessories that individual consumers may not have sufficient need for.

While browsing, diners would be able to thoroughly explore the menu and tell immediately if the roast chicken smells like mom’s; if the spice aroma of the curry is too sharp; if the pastry sounds crusty instead of soft, the way they usually like it. Eating is not a purely visual experience, and these digital menus may be able to communicate more effectively the full character of what the restaurant is actually offering to the hungry consumer.

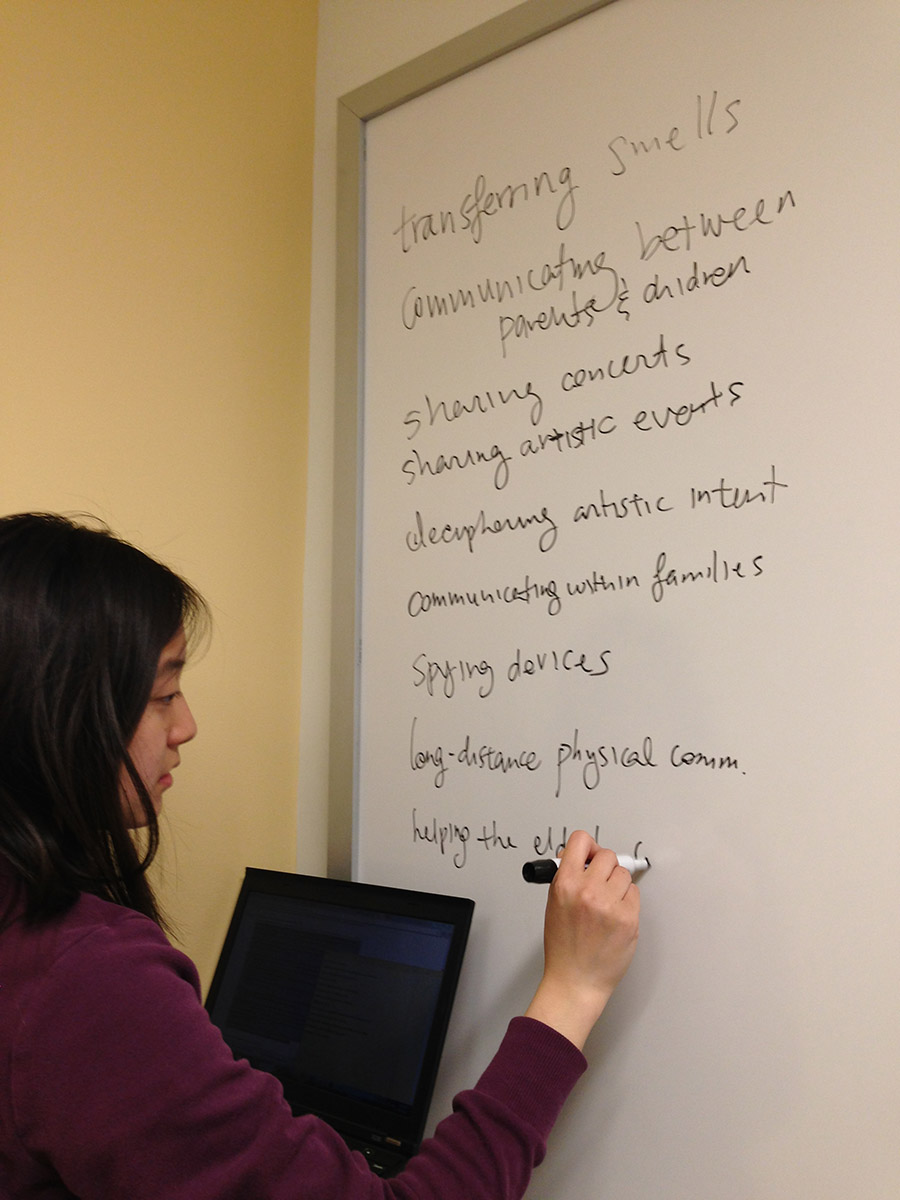

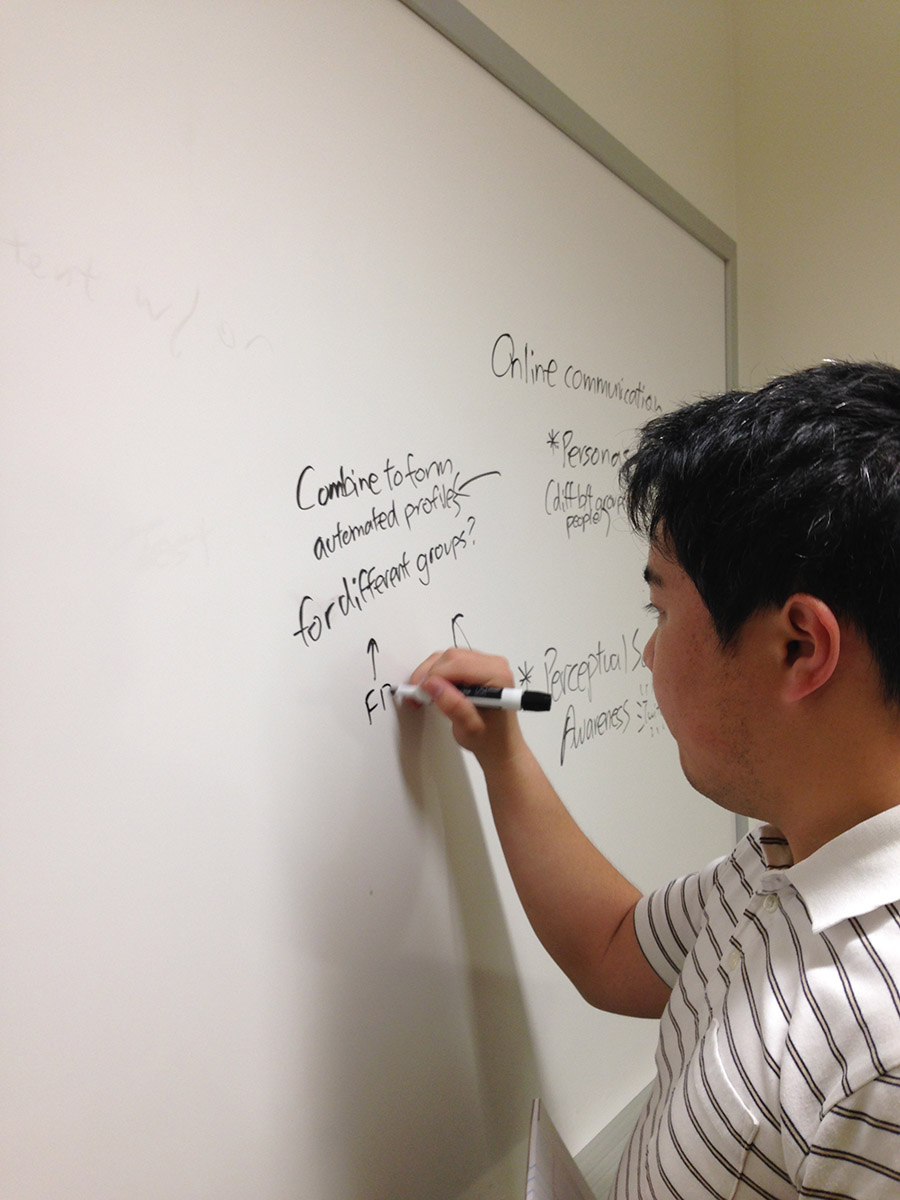

Selection of other ideas

- Lateness estimator: pull data from the accelerometer, traffic, and public transport to give a good estimate of when the other party will arrive

- Collaborative photo/music/story writing

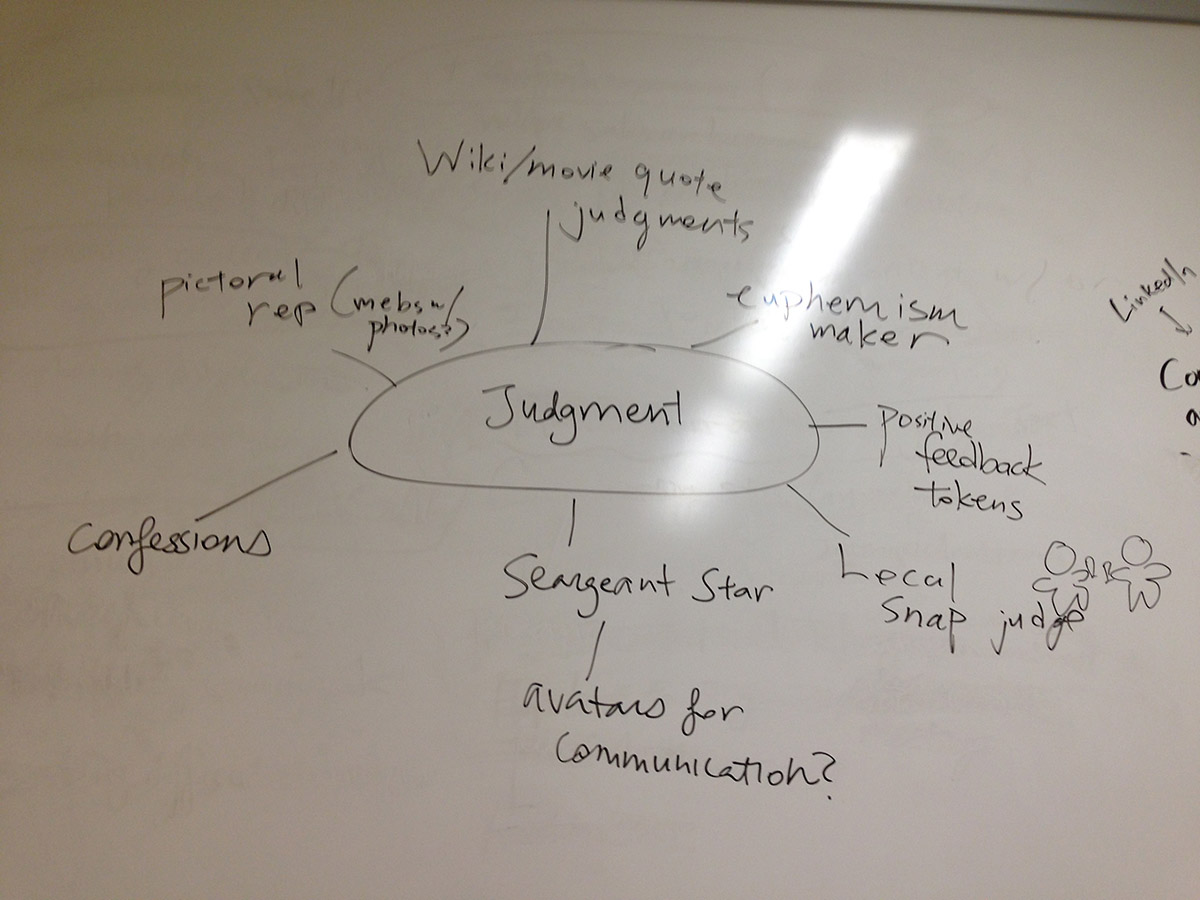

- Judgmentless communication through avatars

- Management of multiple online personas by summarizing each into a page

- Mobile food tours

- Food printers

Photos